Professional Open Source: Extend-Only Design

The only generic versioning strategy that stands the test of time.

This is the third post I’ve written this year about versioning problems in open source software development, though these problems apply to anyone who develops shared software components. The previous two were:

- “Professional Open Source: Maintaining API, Binary, and Wire Compatibility”

- “Practical vs. Strict Semantic Versioning”

In this post I’m going to introduce a battle-tested versioning strategy for APIs, wire formats, and storage formats: extend-only design.

So why so many posts about a subject as esoteric as software versioning? Because it’s an under-appreciated and extremely difficult software problem. The best practitioners of versioning and inter-version compatibility work for companies like Microsoft, Oracle, and Apple on large software platforms, and they’re trained in it early on. Developers creating internal middleware at non-FAANG companies, or building OSS libraries, usually have to learn these practices the hard way.

Where Do “Breaking Changes” Come From?

“Professional Open Source: Maintaining API, Binary, and Wire Compatibility” touches on this subject at length, but let’s summarize:

A breaking change occurs when a previously available format, API, or behavior is removed or changed in an incompatible way.

Some examples:

- Breaking Formatting Change: a new version of a desktop app is released, but it can’t open files saved by the previous version due to a change in the file format.

- Breaking Behavior Change: a change in the default supervision strategy of Akka.NET causes all actors to self-terminate instead of restarting; applications that depended upon the previous default behavior are now likely broken.

- Breaking API Change: a public method in a previous version of Akka.NET is removed, and all plugins that depended upon it either fail to compile or throw a `MissingMethodException` at runtime.

These have a tremendous real-world impact on end-users. Conservative business users are slow to upgrade their dependencies precisely because they are so terrified of running into these types of problems, which is why platform producers like Microsoft have made backwards-compatibility their religion.

All of these breaking changes have one thing in common: something that existed in the past was irrecoverably altered in a future release, and thus broke the downstream applications and libraries that depended upon that prior functionality.

The Complexity of Version Management

Here’s the complex, and therefore tricky, part of breaking changes: your past and future software releases all exist concurrently. Adoption is a consumer-driven activity, and it naturally lags behind releases, which are producer-driven. Software producers therefore have to reason about how to support multiple versions of their software at the same time.

This is a deceptively complicated problem that most OSS producers don’t address because it can be expensive and hard. Hand-waving away users’ upgradability concerns with an answer like “we use SemVer” is… unprofessional, at the very least.

The problems with designing systems to be compatible over many successive versions usually come down to being too permissive with changes between versions. We recently had some issues with Akka.NET where I approved some API changes that I shouldn’t have, and the result was having to roll back those changes in a rapid second release.

What I’m going to propose here is a robust, time-tested solution for versioning any type of software system over time using extend-only design.

The Pillars of Extend-Only Design

I haven’t been able to find concrete evidence of exactly when “extend-only design” as we know it began, but based on my conversations with software developers from the late 1970s and early 1980s, the practice was popularized in the era of mainframe computing for the purpose of file format preservation: making it possible for binary artifacts produced with a previous version of the software to be consumed and reused by future versions.

The pillars of extend-only design apply to any and all software systems, and they are as follows:

- Previous functionality, schema, or behavior is immutable and not open for modification. Anything you made available as a public release lives on with its current behavior, API, and definitions, and can’t be changed.

- New functionality, schema, or behavior can be introduced only through new constructs, and ideally those should be opt-in.

- Old functionality can only be removed after a long period of time, measured in years.

How do these resolve some of the frustrating problems around versioning?

- Old behavior, schema, and APIs are always available and supported even in newer versions of the software;

- New behavior is introduced as opt-in extensions that may or may not be used by the code; and

- Both new and old code pathways are supported concurrently.
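To make the first two pillars concrete, here is a minimal C# sketch built around the supervision-default example from earlier. `SupervisorSettings`, `FailureDirective`, and `WithStopOnFailure` are hypothetical names for illustration, not Akka.NET’s actual supervision API: the v1 default is left untouched, and the new behavior is something callers have to ask for explicitly.

```csharp
// Hypothetical types for illustration only; not Akka.NET's supervision API.
public enum FailureDirective
{
    Restart,
    Stop
}

public sealed class SupervisorSettings
{
    // v1: the shipped default. Changing it in place would silently alter the
    // behavior of every application that relied on it, so it stays frozen.
    public FailureDirective Directive { get; private set; } = FailureDirective.Restart;

    // v1 factory method: same signature and same behavior in every later release.
    public static SupervisorSettings Create() => new SupervisorSettings();

    // v2 extension: the new behavior arrives as a new, opt-in construct layered on top.
    public SupervisorSettings WithStopOnFailure() =>
        new SupervisorSettings { Directive = FailureDirective.Stop };
}
```

Code written against v1 keeps calling `Create()` and gets restart semantics; only callers that explicitly chain `WithStopOnFailure()` see the new behavior. Removing `Create()` would fall under the third pillar and would only happen after a deprecation period measured in years.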

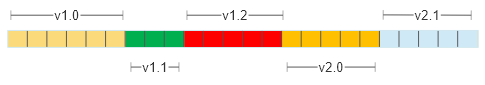

Consider the format of a file as an array of bytes:

Imagine for a moment that the parts of the byte format introduced in v1.0 of the software contain the core data attributes needed to use the software, and that in each successive release new features were gradually introduced while older ones may have been deprecated. Despite all of that, the file format is capable of describing all of the features and attributes in all versions of the software. It’s up to the newest versions of the program to be able to read files written by the oldest versions, using something like the adapter pattern or the strategy pattern to determine which parts of the format are used and how those are translated into the modern format. Desktop applications like Microsoft Office can do this for files that are decades old.
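A minimal sketch of that idea in C#, assuming a hypothetical `Document` type and a made-up binary layout: every file starts with a version number, fields introduced in later versions are only ever appended after the earlier ones, and the reader fills in defaults for fields an older file doesn’t contain. For brevity this uses a simple version check rather than a full adapter or strategy pattern.

```csharp
using System;
using System.IO;

// Hypothetical in-memory model; not tied to any real file format.
public sealed class Document
{
    public string Title { get; set; } = "";   // introduced in v1
    public int PageCount { get; set; }        // introduced in v1
    public string Author { get; set; } = "";  // introduced in v2, appended after the v1 fields
}

public static class DocumentFormat
{
    public const int CurrentVersion = 2;

    // Writes a version header followed by every field that exists in that version.
    public static byte[] Write(Document doc, int version = CurrentVersion)
    {
        using var stream = new MemoryStream();
        using var writer = new BinaryWriter(stream);
        writer.Write(version);
        writer.Write(doc.Title);          // v1 section: layout never changes
        writer.Write(doc.PageCount);
        if (version >= 2)
            writer.Write(doc.Author);     // v2 section: appended, never inserted
        writer.Flush();
        return stream.ToArray();
    }

    // Reads any version from 1 up to CurrentVersion; fields absent from older files keep their defaults.
    public static Document Read(byte[] bytes)
    {
        using var reader = new BinaryReader(new MemoryStream(bytes));
        int version = reader.ReadInt32();
        if (version < 1 || version > CurrentVersion)
            throw new NotSupportedException($"Unsupported format version {version}");

        var doc = new Document
        {
            Title = reader.ReadString(),
            PageCount = reader.ReadInt32()
        };
        if (version >= 2)
            doc.Author = reader.ReadString();
        return doc;
    }
}
```

Because the v1 section is never rewritten, an older reader that tolerated higher version numbers and ignored trailing bytes could still extract the v1 fields from a v2 file; this sketch only handles the more important direction, newer code reading older files.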

Too abstract? Let’s use the example of SQL schema to demonstrate.

Use Case: SQL Schema

We’re building a web app with forms-based authentication.

Table: Users

| UserId (int) | UserName (nvarchar(50)) | Email (nvarchar(255)) | HashedPassword (byte(50)) |
|---|---|---|---|
| 1 | TheAaron | [email protected] | 0xFF11EE00 |
| 2 | Ardbeg | [email protected] | 0xCA1DFFCB |

We conclude that this schema isn’t robust enough to build a proper authentication system, so we decide to make the following changes:

- Our RDBMS, “FakeDb,” introduces a new native `Password` schema type that supports native one-way hashing and a whole host of native functions designed to help store passwords safely; we want to use that instead of running home-rolled, app-level hashing.

- We want to add support for users to have multiple email addresses, which will require adding a second table with a foreign key back to `Users` and a way to mark each user’s primary address.

Without Extend-Only Design

We decide to do what 80% of web app developers do: break the schema with a migration that runs concurrently with our web deployment.

```sql
CREATE TABLE User_Emails(
    UserId int,
    IsPrimary bool default true,
    Email nvarchar(255) not null,
    PRIMARY KEY(UserId, Email),
    FOREIGN KEY(UserId) REFERENCES Users(UserId)
);

-- populate new table (INSERT ... SELECT, since User_Emails already exists)
INSERT INTO User_Emails (UserId, Email)
SELECT Users.UserId, Users.Email
FROM Users;

ALTER TABLE Users DROP COLUMN Email;

-- change the password column's type; all users will be forced to recreate their passwords
-- via new application code being pushed concurrently with this rollout
ALTER TABLE Users MODIFY HashedPassword Password;
```

This SQL accomplishes the objectives we set forth in our scenario, and yes, I see this often when reviewing real production code from companies big and small. Why are these changes done this way? Several reasons:

- An increasingly large proportion of SQL code isn’t written by hand anymore; it’s generated by tooling, and yes, that tooling often generates destructive T-SQL just like this, unbeknownst to the developers;

- Developers generally aren’t careful about versioning, for all of the reasons I described earlier; and

- It’s assumed that the transactional atomicity and consistency guarantees of RDBMS engines will save you in the event that something goes wrong.

In other words: this is an extremely lazy and thus very common approach to versioning database schema.

So what’s dangerous about it?

- Involves a live migration of data on a production database concurrent with the deployment;

- Rollbacks can’t be done quickly as that would involve rolling the live migration back in the other direction;

- Immediately invalidates all user passwords and forces them to recreate them, which can have a lingering negative impact on important business metrics like the number of daily active users; and

- If other applications require read access to this area of the database, they too must be updated, as the old schema for user authentication will no longer work once the `HashedPassword` and `Email` fields have been irrevocably altered.

Fundamentally, this code is unnecessarily destructive to both data and schema. We could easily restructure it so that the same changes are introduced with extend-only design.

With Extend-Only Design

Extend-only design requires some more planning and perhaps some more flexibility on the application-programming side of things in this scenario, but the results are drastically improved:

```sql
CREATE TABLE User_Emails(
    UserId int,
    IsPrimary bool default true,
    Email nvarchar(255) not null,
    PRIMARY KEY(UserId, Email),
    FOREIGN KEY(UserId) REFERENCES Users(UserId)
);

-- populate new table; this step is optional
INSERT INTO User_Emails (UserId, Email)
SELECT Users.UserId, Users.Email
FROM Users;

-- don't modify the existing column; add a new optional one
ALTER TABLE Users ADD NewHashedPassword Password default null;
```

What did we do differently here?

- Kept the existing `Users.Email` field, which can still be used by older versions of the software (i.e. other services that read from the same database), and we have the option to keep populating it during `INSERT`s in order to maintain backwards compatibility; and

- Added a new, optional `Users.NewHashedPassword` field that uses the updated datatype, so our application can keep reading from the previous `Users.HashedPassword` rather than force all users to reset their passwords at the same time.
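On the application side, reading from `Users.HashedPassword` while gradually moving to the new column might look like the C# sketch below. `UserRow` and `PasswordVerifier` are hypothetical names, and because the `Password` type belongs to the fictional FakeDb, the hashing and verification functions are injected as delegates rather than implemented. Populating the new column at a user’s next successful login is one possible migration policy, assumed here for illustration; the schema change itself doesn’t require it.

```csharp
using System;

// Hypothetical in-memory stand-in for one row of the Users table after the extend-only migration.
public sealed class UserRow
{
    public int UserId { get; set; }
    public byte[] HashedPassword { get; set; }     // v1 column: still read, never modified
    public byte[] NewHashedPassword { get; set; }  // v2 column: NULL until this user is migrated
}

public sealed class PasswordVerifier
{
    private readonly Func<string, byte[], bool> _verifyLegacy; // checks a v1 hash (assumed scheme)
    private readonly Func<string, byte[], bool> _verifyNew;    // checks a v2 hash (assumed scheme)
    private readonly Func<string, byte[]> _hashNew;            // produces a v2 hash (assumed scheme)

    public PasswordVerifier(
        Func<string, byte[], bool> verifyLegacy,
        Func<string, byte[], bool> verifyNew,
        Func<string, byte[]> hashNew)
    {
        _verifyLegacy = verifyLegacy;
        _verifyNew = verifyNew;
        _hashNew = hashNew;
    }

    public bool Verify(UserRow user, string password)
    {
        // Prefer the v2 column whenever it has been populated.
        if (user.NewHashedPassword != null)
            return _verifyNew(password, user.NewHashedPassword);

        // Otherwise fall back to the v1 column instead of forcing a password reset.
        if (user.HashedPassword == null || !_verifyLegacy(password, user.HashedPassword))
            return false;

        // Successful legacy login: populate the v2 column. The v1 column is left
        // intact so services still running against the old schema keep working.
        user.NewHashedPassword = _hashNew(password);
        return true;
    }
}
```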

And what are the advantages of this approach?

- SQL schema can be deployed prior to the application itself as the old schema is still preserved;

- Rollbacks don’t require a second database deployment;

- Services can be deployed in arbitrary orders since v1 schema is still supported by newer versions of the software; and

- No data is destroyed.

Disadvantages?

- V2 application code requires more branching: it has to check whether the newer fields / tables are populated and, if not, fall back to the older schema;

- Requires more planning; for instance, we might have to accommodate staggered service upgrades by writing to both the `User_Emails` table and the `Users.Email` field across several releases of the software; and

- Results in steady schema and data growth over time, since we never throw anything away.
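The branching and dual-writing described in these trade-offs might look like the C# sketch below, where in-memory collections stand in for the `Users.Email` column and the `User_Emails` table. `EmailStore`, `SetPrimaryEmail`, and `GetPrimaryEmail` are hypothetical names; in a real application both writes would go to the database inside a single transaction.

```csharp
using System.Collections.Generic;

// Hypothetical in-memory stand-ins for the v1 column and the v2 table.
public sealed class EmailStore
{
    // Users.Email (v1 schema): one address per user.
    public Dictionary<int, string> UsersEmail { get; } = new Dictionary<int, string>();

    // User_Emails (v2 schema): many addresses per user, at most one flagged as primary.
    public List<(int UserId, string Email, bool IsPrimary)> UserEmails { get; } =
        new List<(int UserId, string Email, bool IsPrimary)>();

    // v2 write path: updates the new table AND keeps the v1 column in sync,
    // so services that only know the v1 schema still see the primary address.
    public void SetPrimaryEmail(int userId, string email)
    {
        for (int i = 0; i < UserEmails.Count; i++)
        {
            var row = UserEmails[i];
            if (row.UserId == userId && row.IsPrimary)
                UserEmails[i] = (row.UserId, row.Email, false); // demote the old primary
        }

        int existing = UserEmails.FindIndex(r => r.UserId == userId && r.Email == email);
        if (existing >= 0)
            UserEmails[existing] = (userId, email, true);
        else
            UserEmails.Add((userId, email, true));

        UsersEmail[userId] = email; // dual write to the v1 column
    }

    // v2 read path: prefer the new table, then fall back to the v1 column
    // for rows written by older services that never touched User_Emails.
    public string GetPrimaryEmail(int userId)
    {
        foreach (var row in UserEmails)
            if (row.UserId == userId && row.IsPrimary)
                return row.Email;

        return UsersEmail.TryGetValue(userId, out var legacy) ? legacy : null;
    }
}
```

Once every service writing to this part of the database has been upgraded and the backfill is complete, the fallback branch stops being exercised, but under extend-only design the v1 column stays in place until the long deprecation window has passed.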

On balance, what we gain is a predictable versioning scheme that allows older versions of the software to operate without any interruption and allows newer versions of the software to cooperate with the old. And the trade-offs are minor - mostly they require developers to spend some time planning prior to coding.

Extend-Only Design with APIs

Just to make things explicit, how do we apply extend-only design to APIs in shared libraries?

Imagine we have the following Serializer base class in Akka.NET:

```csharp
public abstract class Serializer
{
    internal static string GetErrorForSerializerId(int id) => SerializerErrorCode.GetErrorForSerializerId(id);

    /// <summary>
    /// The actor system to associate with this serializer.
    /// </summary>
    protected readonly ExtendedActorSystem system;

    private readonly FastLazy<int> _value;

    /// <summary>
    /// Initializes a new instance of the <see cref="Serializer" /> class.
    /// </summary>
    /// <param name="system">The actor system to associate with this serializer. </param>
    protected Serializer(ExtendedActorSystem system)
    {
        this.system = system;
        _value = new FastLazy<int>(() => SerializerIdentifierHelper.GetSerializerIdentifierFromConfig(GetType(), system));
    }

    /// <summary>
    /// Completely unique value to identify this implementation of Serializer, used to optimize network traffic
    /// Values from 0 to 16 are reserved for Akka internal usage
    /// </summary>
    public virtual int Identifier => _value.Value;

    /// <summary>
    /// Returns whether this serializer needs a manifest in the fromBinary method
    /// </summary>
    public abstract bool IncludeManifest { get; }

    /// <summary>
    /// Serializes the given object into a byte array
    /// </summary>
    /// <param name="obj">The object to serialize </param>
    /// <returns>A byte array containing the serialized object</returns>
    public abstract byte[] ToBinary(object obj);

    /// <summary>
    /// Serializes the given object into a byte array and uses the given address to decorate serialized ActorRefs
    /// </summary>
    /// <param name="address">The address to use when serializing local ActorRefs</param>
    /// <param name="obj">The object to serialize</param>
    /// <returns>A byte array containing the serialized object</returns>
    public byte[] ToBinaryWithAddress(Address address, object obj)
    {
        return Serialization.WithTransport(system, address, () => ToBinary(obj));
    }

    /// <summary>
    /// Deserializes a byte array into an object of type <paramref name="type"/>.
    /// </summary>
    /// <param name="bytes">The array containing the serialized object</param>
    /// <param name="type">The type of object contained in the array</param>
    /// <returns>The object contained in the array</returns>
    public abstract object FromBinary(byte[] bytes, Type type);

    /// <summary>
    /// Deserializes a byte array into an object.
    /// </summary>
    /// <param name="bytes">The array containing the serialized object</param>
    /// <returns>The object contained in the array</returns>
    public T FromBinary<T>(byte[] bytes) => (T)FromBinary(bytes, typeof(T));
}
```

I want to introduce a version of the Serializer API that can accept a Memory<T> so we can take advantage of memory pooling inside our networking and persistence pipelines. Could we use extend-only API design to safely introduce these capabilities to Akka.NET without introducing any binary incompatibilities?

This class is used heavily inside multiple parts of Akka.NET as well as end-user code, so it’s a sufficiently non-trivial example.

Here’s my quick and dirty attempt at implementing extend-only design:

```csharp
using System;
using System.Buffers; // MemoryPool<T>, IMemoryOwner<T>
// (Akka.NET-specific using directives omitted)

public abstract class Serializer
{
    internal static string GetErrorForSerializerId(int id) => SerializerErrorCode.GetErrorForSerializerId(id);

    /// <summary>
    /// The actor system to associate with this serializer.
    /// </summary>
    protected readonly ExtendedActorSystem system;

    private readonly FastLazy<int> _value;

    protected readonly MemoryPool<byte> _sharedMemory;

    /// <summary>
    /// Initializes a new instance of the <see cref="Serializer" /> class.
    /// </summary>
    /// <param name="system">The actor system to associate with this serializer. </param>
    protected Serializer(ExtendedActorSystem system) : this(system, MemoryPool<byte>.Shared)
    {
    }

    /// <summary>
    /// Initializes a new instance of the <see cref="Serializer" /> class.
    /// </summary>
    /// <param name="system">The actor system to associate with this serializer. </param>
    /// <param name="sharedMemory">The pool from which serialization buffers are rented.</param>
    protected Serializer(ExtendedActorSystem system, MemoryPool<byte> sharedMemory)
    {
        this.system = system;
        _sharedMemory = sharedMemory;
        _value = new FastLazy<int>(() => SerializerIdentifierHelper.GetSerializerIdentifierFromConfig(GetType(), system));
    }

    /// <summary>
    /// Completely unique value to identify this implementation of Serializer, used to optimize network traffic
    /// Values from 0 to 16 are reserved for Akka internal usage
    /// </summary>
    public virtual int Identifier => _value.Value;

    /// <summary>
    /// Returns whether this serializer needs a manifest in the fromBinary method
    /// </summary>
    public abstract bool IncludeManifest { get; }

    /// <summary>
    /// Serializes the given object into a byte array
    /// </summary>
    /// <param name="obj">The object to serialize </param>
    /// <returns>A byte array containing the serialized object</returns>
    public abstract byte[] ToBinary(object obj);

    /// <summary>
    /// Serializes the given object into a buffer rented from the shared memory pool
    /// </summary>
    /// <param name="obj">The object to serialize </param>
    /// <returns>An owner of the rented buffer; dispose it to return the buffer to the pool</returns>
    public virtual IMemoryOwner<byte> ToBinaryMemory(object obj)
    {
        // Default implementation piggy-backs on the existing ToBinary contract.
        var bin = ToBinary(obj);
        // Caveat: Rent can return a buffer larger than bin.Length, so callers
        // need to know the payload length in order to ignore the trailing bytes.
        var memory = _sharedMemory.Rent(bin.Length);
        bin.CopyTo(memory.Memory);
        return memory;
    }

    /// <summary>
    /// Serializes the given object into a byte array and uses the given address to decorate serialized ActorRefs
    /// </summary>
    /// <param name="address">The address to use when serializing local ActorRefs</param>
    /// <param name="obj">The object to serialize</param>
    /// <returns>A byte array containing the serialized object</returns>
    public byte[] ToBinaryWithAddress(Address address, object obj)
    {
        return Serialization.WithTransport(system, address, () => ToBinary(obj));
    }

    /// <summary>
    /// Deserializes a byte array into an object of type <paramref name="type"/>.
    /// </summary>
    /// <param name="bytes">The array containing the serialized object</param>
    /// <param name="type">The type of object contained in the array</param>
    /// <returns>The object contained in the array</returns>
    public abstract object FromBinary(byte[] bytes, Type type);

    /// <summary>
    /// Deserializes a block of memory into an object of type <paramref name="type"/>.
    /// The default implementation copies it into an array and calls the byte array overload.
    /// </summary>
    public virtual object FromBinary(Memory<byte> bytes, Type type)
    {
        return FromBinary(bytes.ToArray(), type);
    }

    /// <summary>
    /// Deserializes a byte array into an object.
    /// </summary>
    /// <param name="bytes">The array containing the serialized object</param>
    /// <returns>The object contained in the array</returns>
    public T FromBinary<T>(byte[] bytes) => (T)FromBinary(bytes, typeof(T));
}
```

What have we done here?

- We’ve preserved our previous `abstract` members, which are overridden in subclasses, including ones defined in our users’ solutions as well as in third-party plugins;

- We’ve added an overloaded constructor which accepts a `MemoryPool<byte>`, and the old constructor is still available, defaulting to `MemoryPool<byte>.Shared`; and

- We’ve added two new `virtual` methods which piggy-back on top of our previous API contracts and return or consume a new `IMemoryOwner<byte>` or `Memory<byte>`, which new versions of Akka.NET can begin using in order to take advantage of pooling.

None of the new `Serializer` methods are called by default yet, so the previous behavior is preserved across the board. It is therefore safe for users on older versions of Akka.NET to upgrade to this version, since the behavioral guarantees from past versions are still available. That is how extend-only design is ultimately supposed to work: preserve the past while opening up future options that offer new behaviors and trade-offs concurrently.
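To check that existing subclasses really do get the new capabilities for free, here is a self-contained C# sketch that strips the Akka.NET dependencies out of the class above. `SerializerSketch`, `LegacyStringSerializer`, and `SlicedOwner` are hypothetical names. One deliberate difference from the quick-and-dirty version: because `MemoryPool<byte>.Rent` may return a buffer larger than requested, this sketch wraps the rented buffer so that its `Memory` exposes only the bytes actually written.

```csharp
using System;
using System.Buffers;
using System.Text;

// Hypothetical, Akka-free stand-in for the Serializer base class (no actor system, no identifiers).
public abstract class SerializerSketch
{
    private readonly MemoryPool<byte> _pool;

    protected SerializerSketch() : this(MemoryPool<byte>.Shared) { }    // v1 constructor, unchanged
    protected SerializerSketch(MemoryPool<byte> pool) { _pool = pool; } // v2 overload

    public abstract byte[] ToBinary(object obj);                // v1 contract
    public abstract object FromBinary(byte[] bytes, Type type); // v1 contract

    // v2 extension: the default implementation piggy-backs on the v1 contract.
    public virtual IMemoryOwner<byte> ToBinaryMemory(object obj)
    {
        var bin = ToBinary(obj);
        var rented = _pool.Rent(bin.Length);
        bin.CopyTo(rented.Memory);
        // Rent may hand back a larger buffer, so expose only the bytes actually written.
        return new SlicedOwner(rented, bin.Length);
    }

    // v2 extension: copies into an array and calls the v1 contract.
    public virtual object FromBinary(Memory<byte> bytes, Type type) => FromBinary(bytes.ToArray(), type);

    // Wraps a rented buffer and trims its Memory to the payload length.
    private sealed class SlicedOwner : IMemoryOwner<byte>
    {
        private readonly IMemoryOwner<byte> _inner;
        private readonly int _length;

        public SlicedOwner(IMemoryOwner<byte> inner, int length)
        {
            _inner = inner;
            _length = length;
        }

        public Memory<byte> Memory => _inner.Memory.Slice(0, _length);

        public void Dispose() => _inner.Dispose(); // returns the buffer to the pool
    }
}

// A serializer written against v1: it only overrides the abstract members.
public sealed class LegacyStringSerializer : SerializerSketch
{
    public override byte[] ToBinary(object obj) => Encoding.UTF8.GetBytes((string)obj);
    public override object FromBinary(byte[] bytes, Type type) => Encoding.UTF8.GetString(bytes);
}
```

A serializer written after the change could override `FromBinary(Memory<byte>, Type)` to read directly from the pooled buffer, while serializers written before it, like `LegacyStringSerializer`, keep working unchanged through the default implementations.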