Software Falsehoods: you can build it cheap, fast, and good - pick two

Price is independent of both software quality and speed.

“You can build it cheap, fast, and good - pick two” is how the saying goes, referring to the inherent trade-offs in software development priorities. It makes intuitive sense but utterly fails in real-world applications. Two simple reasons why this correlation does not hold:

- Price is not realistically correlated to quality of outcomes and

- Price isn’t correlated to faster delivery times either.

Price is its own independent quality determined entirely by the buyer’s and seller’s perception of value.

As the Dan Luu article I linked to mentions, it’s fairly difficult even for experts within a given domain to accurately assess the market value of their own services (or someone else’s), which is why price discovery has to be tested continuously[1].

But let’s take a closer look under the covers at the price vs. quality and price vs. speed relationships.

Price vs. Quality

If you wanted to formally falsify the price and quality correlation, the easiest way to do it is proof by counterexample: how many times has a very expensive “senior” developer been brought onto your team, project, or organization and contributed nothing but total destruction of value? Or how about spending a lot of money on a hosted service, consulting firm, or third-party components - only to get exceedingly poor results in return?

Over-paying is common in the software industry because more often than not when we agree on price we’re agreeing based on reputation, not past results.

Organizational Fit

But it goes a bit further than that - say someone is a truly “great” software developer with a proven track record (i.e. they’ve worked successfully with other members of your team in the past). You pay them a handsome premium to join your company, but your team is a bureaucratic BigCo hell-hole where the hours spent in meetings are 5x the hours spent delivering.

You’re not going to extract much value from this developer despite their quality because your organization isn’t designed to properly maximize value from talent. In BigCo-type environments, organizations operate to minimize tail risk - they’d actually be better off hiring mid-tier developers who have some experience and prefer not to rock any boats or make big changes.

The Qualities of “Quality Software”

This raises the question: what does software quality even look like? If you’re looking at the output of a software development lifecycle, it’d be software that ships without any substantial bugs, is performant, well-documented, and well-tested, has a sufficiently good interface that satisfies the stakeholders, and is delivered on time and within budget.

How many developers in your organization could write a complete user-story applicable to their current daily work from memory? How many could not only nail the real end-user acceptance criteria with perfect accuracy, but could also explain what the sources of complexity are for this user-story AND what are the best strategies for mitigating those? Could they describe which parts of the user story are likely to change and evolve in response to making first contact with real-users?

I recently did some consulting work for a manufacturing company. One of the attendees during our meetings was a veteran software developer who’d been with the company for almost thirty years. He wrote native drivers for some of their embedded systems and wrote a great portion of the WPF application that the company was trying to parallelize into a backend service using Akka.NET. He was one of the exceedingly rare developers who could, quickly and confidently, do all of this.

Very few software developers, even well-intentioned ones, grasp the most basic measure of quality: understanding what they’re building, why they’re building it, and who they’re building it for.

Measures of Quality

Then there’s the quantitative approach to quality - how do you measure it?

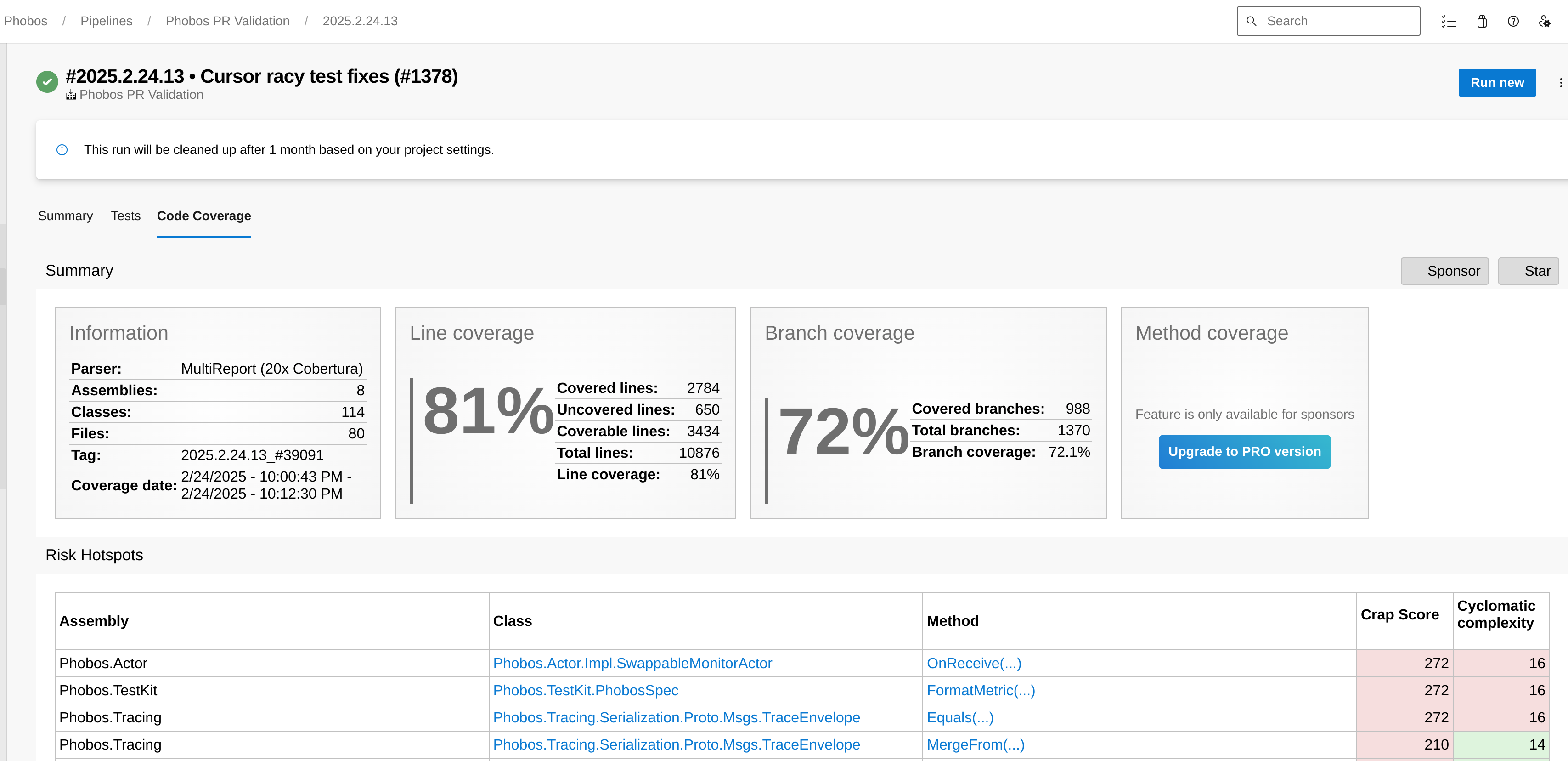

There’s a fair number of measurables implicitly described inside my generalization of “high-quality software”: CRAP scores, bug lists, benchmarks, test results, and measured development velocity. How many organizations measure any of these consistently?

Very, very few - so how do organizations even know whether they’re getting a high-quality or low-quality result? Usually it’s going to be when the project misses a deadline, royally pisses off a customer, goes up in flames in a spectacular (and usually avoidable) production incident, or most commonly: when the project’s requirements inevitably change and the previously implemented structure of the program can’t accommodate the change.

In sum, when developers speak to “quality” of results they often have no idea what they’re talking about qualitatively and certainly not quantitatively.

In the Akka.NET project we try very hard to measure these and it’s challenging even when you want to do it - e.g. generating a CRAP score for our full project on every pull request would add another 20-30 minutes to our already long test execution times. If we ran benchmarks on every PR, that’d probably add another 90 minutes. All of this inhibits cycle time, which is the next thing we’ll discuss.
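For readers who haven’t run into it, the CRAP (Change Risk Anti-Patterns) score mentioned above is usually defined per method as `comp² × (1 − cov/100)³ + comp`, where `comp` is cyclomatic complexity and `cov` is the percentage of the method covered by tests. Here’s a minimal Python sketch of that formula; the function name and the sample inputs are made up for illustration and aren’t taken from the Akka.NET build.

```python
def crap_score(complexity: int, coverage_pct: float) -> float:
    """CRAP score for a single method.

    complexity   -- cyclomatic complexity of the method (>= 1)
    coverage_pct -- test coverage of the method, as a percentage (0-100)
    """
    if complexity < 1:
        raise ValueError("cyclomatic complexity must be at least 1")
    if not 0 <= coverage_pct <= 100:
        raise ValueError("coverage must be a percentage between 0 and 100")

    uncovered = 1.0 - coverage_pct / 100.0
    # Untested complexity is penalized heavily; full coverage leaves only
    # the raw complexity term.
    return complexity ** 2 * uncovered ** 3 + complexity


# Hypothetical methods: same complexity, very different risk.
print(crap_score(10, 0))    # complex and untested
print(crap_score(10, 100))  # complex but fully covered
print(crap_score(5, 50))    # moderate complexity, half covered
```

The formula itself is cheap. The expensive part is collecting coverage and complexity for every method in a large codebase on every build, which is where the extra minutes come from. A threshold of around 30 is often cited as the point where a method is considered risky, but treat that as a convention rather than a rule.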

Price vs. Speed

There are absolutely instances where you can trade money for speed:

- Paying AWS instead of building and configuring your own hosting infrastructure

- Buying a license for self-hosted APM tools like Seq

- Subscribing to AI code-gen tools like Cursor

- Buying better development tools such as JetBrains products

- Shameless plug: hiring a company like Petabridge to train your team on a new technology like Akka.NET (instead of spending months doing it by trial-and-error)[2]

Here is what you’ll notice about all of these exchanges of cash for speed:

- These products sold by vendors are all highly generalized and / or commodified;

- The vendors, therefore, have generalizable solutions to these problems; and

- There’s no overt promise of quality outcomes either - Datadog might happily invoice you for five figures a month without necessarily providing you with actionable insights into your application’s behavior.

So here’s the catch: you can trade money in exchange for timely access to external organizations’ expertise in the form of products and services, but that’s only a tiny fraction of what constitutes delivering a software product to your own customers.

The nitty-gritty of software development is ultimately customization, all the way down: users, business cases, requirements, regulations, restrictions, and more.

Hiring More People

How do you accomplish all of that, with quality, quickly? The original saying posits that money is how you solve this problem: by hiring more people.

I’m not going to bother recapping The Mythical Man-Month here - I’m going to do something even lazier: recap a recap of The Mythical Man-Month by citing Brooks’s Law: “[a]dding manpower to a late software project makes it later.”

The thrust of this point is that scaling up team size also scales communication overhead - which is going to shift the ratio of time spent delivering to time spent talking about delivering in the wrong direction, leading to later delivery. It takes extremely thoughtful organization design to overcome this, such as Jeff Bezos’ API-first mandate for Amazon[3].
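One common way to illustrate that overhead is to count pairwise communication paths. If we assume every person on a team may need to coordinate with every other person, a team of `n` people has `n × (n − 1) / 2` possible channels. The sketch below is a simplification under that assumption (real teams rarely talk all-to-all), and the function name is hypothetical.

```python
def communication_channels(team_size: int) -> int:
    """Number of distinct person-to-person channels in a team,
    assuming everyone may need to talk to everyone else."""
    if team_size < 0:
        raise ValueError("team size cannot be negative")
    return team_size * (team_size - 1) // 2


# Headcount grows linearly; potential channels grow quadratically.
for n in (3, 5, 10, 20):
    print(f"{n:>2} people -> {communication_channels(n):>3} channels")
```

Going from 5 to 10 people doubles the headcount but takes the potential channels from 10 to 45, which is why a mandate that forces teams to talk through well-defined interfaces attacks the problem at its source.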

Hiring the ‘Right’ People

If software speed can’t be accomplished with more people, what about doing so with the “right” people? If we just start hiring more experienced software developers, surely they’ll be able to produce things faster than juniors or our current people?

To a point this may help - you can make some internal hires or bring aboard some consultants with relevant technology stack and domain expertise. But that invites two new potential issues:

- Domain training overhead - even if you hire someone within your industry with relevant expertise, they still have to be trained on your way of doing things and your requirements. Rinse and repeat for each hire, internal or external.

- Uncertainty - even if you get a solid senior developer with a good track record within your industry, there’s zero guarantee that they’re going to be able to move things along any faster than other developers on your team. It’s the quality problem all over again.

If you’re trying to improve your iteration speed / cycle time on software, “hire fast developers” is a hope-based strategy - and therefore, a loser.

Software Quality and Speed Are Methodology Problems

Like I’ve said from the beginning - price is correlated to value and value is very difficult to assess. This is why money is not a guaranteed cure for software delivery problems.

Software delivery quality and speed are ultimately methodology problems - personnel is definitely a factor, but it’s 80% how we’re doing business and 20% who’s doing it.

Here’s a real correlation you can take home: software development teams that measure and focus on speed will inherently deliver higher quality software. There are numerous studies[4] from the continuous delivery universe that reach this same conclusion: the faster your team becomes at delivering software, the faster it will find and fix bugs, and therefore quality improves.

I’ll write a subsequent blog post on speed-improving development methodologies (CI/CD isn’t the whole story) for software teams - so please subscribe for that.

[1] Pricing is all about finding local maxima, and that’s very difficult to do even for a fairly small locality.

[2] You can learn more about what we offer at Petabridge here: https://petabridge.com/training/onsite-training/

[3] https://konghq.com/blog/enterprise/api-mandate - this ultimately led to the creation of Amazon Web Services.

[4] “On the effects of continuous delivery on code quality: A case study in industry”; “Adoption and Adaptation of CI/CD Practices in Very Small Software Development Entities: A Systematic Literature Review”