I had never heard of Michael Green before his now-infamous essay “Part 1: My Life Is a Lie - How a Broken Benchmark Quietly Broke America” went extremely viral on X.

Go read it. The short version: the real poverty line for a family of four is closer to $140,000 than $31,000.

“The U.S. poverty line is calculated as three times the cost of a minimum food diet in 1963, adjusted for inflation.”

and

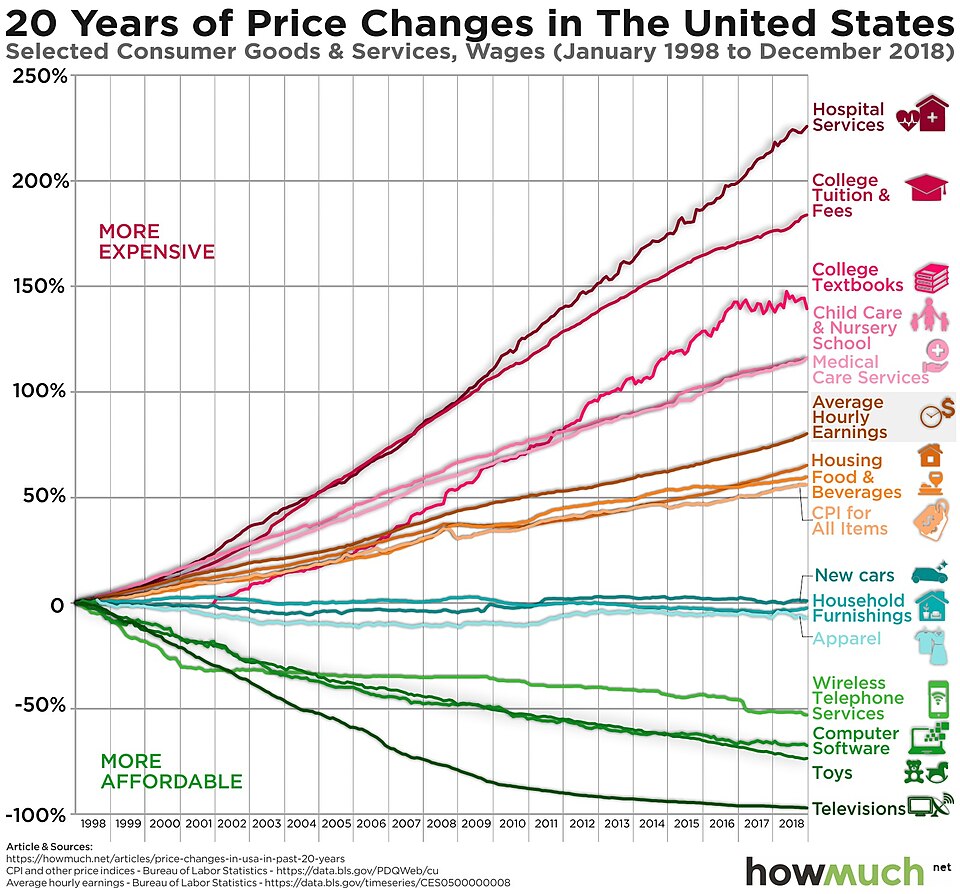

The composition of household spending transformed completely. In 2024, food-at-home is no longer 33% of household spending. For most families, it’s 5 to 7 percent.

Housing now consumes 35 to 45 percent. Healthcare takes 15 to 25 percent. Childcare, for families with young children, can eat 20 to 40 percent.

If you keep Orshansky’s logic—if you maintain her principle that poverty could be defined by the inverse of food’s budget share—but update the food share to reflect today’s reality, the multiplier is no longer three.

It becomes sixteen.

Which means if you measured income inadequacy today the way Orshansky measured it in 1963, the threshold for a family of four wouldn’t be $31,200.

It would be somewhere between $130,000 and $150,000.

And remember: Orshansky was only trying to define “too little.” She was identifying crisis, not sufficiency. If the crisis threshold—the floor below which families cannot function—is honestly updated to current spending patterns, it lands at $140,000.
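The core of the quoted argument is one line of arithmetic: the multiplier is the reciprocal of food's share of the household budget. Here is a minimal Python sketch of that reciprocal. It uses only the food shares quoted above. The function name `poverty_multiplier` is my own hypothetical label, not something from Green's essay or any official methodology.

```python
def poverty_multiplier(food_share: float) -> float:
    """Orshansky-style multiplier: the inverse of food's budget share.

    Hypothetical helper for illustration; food_share is a fraction in (0, 1].
    """
    if not 0 < food_share <= 1:
        raise ValueError("food_share must be in (0, 1]")
    return 1 / food_share


# Food-at-home shares taken from the passage quoted above.
shares = {
    "1963 (Orshansky)": 1 / 3,
    "2024, upper end": 0.07,
    "2024, lower end": 0.05,
}

for label, share in shares.items():
    # Print each share alongside the multiplier it implies.
    print(f"{label}: food share {share:.1%} -> multiplier {poverty_multiplier(share):.1f}")
```

A food share of one third gives Orshansky's original multiplier of 3. At 5 to 7 percent, the reciprocal runs from about 14.3 to 20. A multiplier of sixteen corresponds to a food share of 1/16, or 6.25 percent, which falls inside that range. The sketch only checks the multiplier. It does not reproduce how Green gets from the multiplier to a dollar threshold.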

This article resonated with me because I have had three children since 2021. Well, technically, my third arrives in a week.

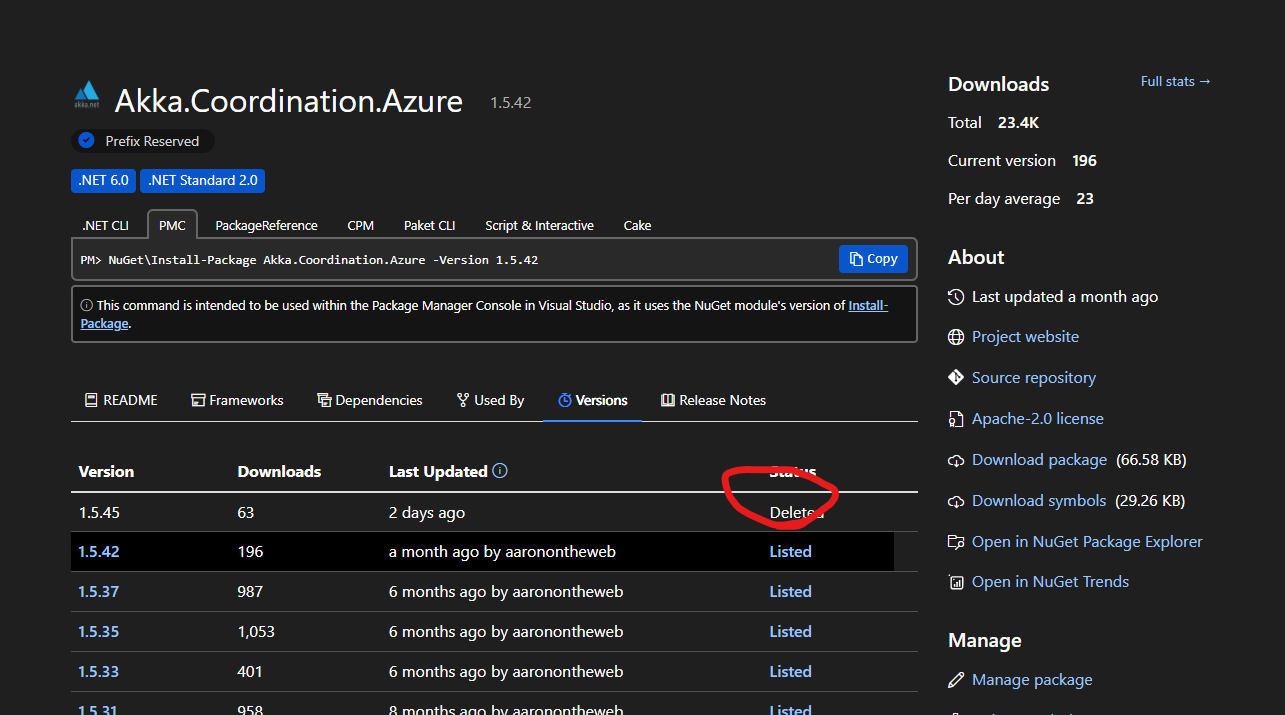

I have spent $30,000, $35,000, and now $40,000, respectively, to deliver each child.

That is my full out-of-pocket cost, paid in cash, as a self-employed entrepreneur who runs a small business. I do not have a corporate daddy to share the costs with me. This is insane and totally unsustainable, yet every central-bank-worshipping think-tank economist who attacked Green had nothing to say when I asked them to justify why I bear the full cost of a public good: bringing a new taxpayer into this world.

America has a cost of living crisis; it’s not being taken seriously by “serious” economists; and the ongoing failure to address it will lead to political, social, and economic calamity.